by Douglas Yeo

NOTE: I am periodically updating this article with new information about artificial intelligence and the trombone as it becomes available.

I first heard of ChatGPT in an article in The Atlantic by Daniel Herman, “The End of High-School English” (December 9, 2022). He wrote:

Teenagers have always found ways around doing the hard work of actual learning. CliffsNotes date back to the 1950s, “No Fear Shakespeare” puts the playwright into modern English, YouTube offers literary analysis and historical explication from numerous amateurs and professionals, and so on. For as long as those shortcuts have existed, however, one big part of education has remained inescapable: writing. Barring outright plagiarism, students have always arrived at that moment when they’re on their own with a blank page, staring down a blinking cursor, the essay waiting to be written.

Now that might be about to change. The arrival of OpenAI’s ChatGPT, a program that generates sophisticated text in response to any prompt you can imagine, may signal the end of writing as a gatekeeper, a metric for intelligence, a teachable skill [emphasis added].

The end of writing. As a researcher, writer, and college professor, I took notice. I found another article in The Atlantic, this one by Stephen Marche (December 6, 2022), with a bold title: The College Essay Is Dead.

These are breathless, panicky assertions. What is this?

One of the things that some people find appealing about artificial intelligence (AI) programs like ChatGPT is that they may help people generate writing that is far better than what they could produce themselves. Feed ChatGPT your poorly constructed text and—voilà!—AI will make you sound like a college professor with a PhD who has published 100 books.

Does it work? Are these breathless, panicky assertions true?

Two things are at play here: quality of writing, and quality of writing with quality information. I decided to put ChatGPT to the test. I signed up for an account (that took one minute; it’s free at this time, although eventually the platform will be monetized) and started my experiment.

I decided to start with a piece of bad writing. I imagined I was a student taking a college-level introduction to music class. The teacher asked the students in the class to write an essay about any musical instrument, and I chose the trombone. The teacher didn’t want an essay with footnotes; she wanted more of an “impression paper.” I wrote a few poorly constructed sentences and asked ChatGPT to “fix this and make it better.” Here’s what ChatGPT did with my pathetic writing. The image below is a screenshot of the result, and in all of the examples below, the text I entered is preceded by an icon with DO (for Douglas) and ChatGPT’s suggestion is next to the green OpenAI/ChatGPT logo icon.

ChatGPT fixed my punctuation, removed my colloquial language, and gave my writing an air of sophistication. But it’s obvious that there are tradeoffs. It did not address my comment about a trombone player on Star Trek and one in professional wrestling. My little essay mentioned that the trombone was invented “a long time ago” but ChatGPT ignored that phrase and didn’t write about the trombone’s origins. When I wrote about “a trombone with buttons,” ChatGPT failed to recognize that I was referencing a valve trombone. Some of the limitations of what ChatGPT can do are obvious.

Let’s look at an established piece of writing, one that has been widely derided as “an atrocious sentence.” That judgment comes from many critics over the years, and the sentence has even been enshrined in the Bulwer-Lytton Fiction Contest, an annual contest in which over 10,000 people vie to write a dreadful opening sentence. The contest takes its name from Sir Edward Bulwer-Lytton, whose 1830 novel, Paul Clifford, opens with this line:

The line was immortalized by cartoonist Charles Schulz in countless comic strips featuring Snoopy, who frequently appeared in panels where he was writing a novel (the strip below is the first such appearance, August 27, 1969):

Actually, I’m not so sure this is a terrible sentence. It certainly is evocative. It is long, but that is part of what keeps the reader’s attention. The scene is very clearly described and when I read this sentence, I can feel the wind and rain against my window. Others may disagree, but I’ve always felt the criticism of Bulwer-Lytton’s opening line is a little unfair. I asked ChatGPT to improve on Bulwer-Lytton’s sentence. Here’s what I got:

Is this really better? Gone are the evocative words—torrents, violent, rattling, fiercely, swept, scanty. One sentence has become three, the flow interrupted by periods. I don’t see this as an improvement; it lacks any true literary sense. It sounds stiff, generic, unimaginative, juvenile.

Next, I wanted to see what ChatGPT would do with a piece of excellent writing. I turned to an essay by Jacques Barzun, one of the great philosophers and scholars of the twentieth century (Barzun died in 2012 at the age of 104). I have admired his work for many years. Barzun wrote a major biography of one of my favorite composers, Hector Berlioz (Berlioz and the Romantic Century, 2 volumes, Atlantic-Little, Brown, 1949), and it was Berlioz and the serpent that led to my having some memorable personal engagement with Barzun. He was also known as a superb writer, and his essay about copy editors, “Behind the Blue Pencil: Censorship or Creeping Creativity?” (The American Scholar, Summer 1985, Vol. 54, No. 3, pp. 385-388), is a tour de force. Barzun opines, in his pithy prose, that copy editors often mangle an author’s intent as they try to “improve” an author’s writing. I thought I would ask ChatGPT to fix and improve two paragraphs from Barzun’s article. Here’s what I got:

Again, I cannot argue that ChatGPT’s work is an improvement—on any level—on Barzun. The elimination of the phrase in scare quotes, “first-rate stuff but needs a lot of work,” is an egregious omission. Beyond that, ChatGPT reorders words and substitutes others in ways that eviscerate Barzun’s writing and make him sound like a middle school student who thought that using a few big, fancy words—like “proliferation”—would impress the teacher.

But there’s more, in this next paragraph by Barzun:

The problems we see are obvious, and herein lies one of the cautionary lessons for anyone who is hoping that AI will replace the need for an individual to think through both information and sentence structure. Every writer has an individual voice. When a copy editor (ChatGPT is, in one respect, a virtual copy editor) changes an author’s prose, it often comes out in the voice of the editor. The author’s voice is lost. ChatGPT’s editing lacks sophistication, and it does not process irony or the turns of phrase that make up interesting, engaging prose. Barzun’s superb writing—keep in mind that Barzun’s book, Simple & Direct: A Rhetoric for Writers, is one of the great texts about writing style—has been reduced to the very kind of writing that he deplored.

While some commentators are praising ChatGPT as a tool to help students who struggle with writing put together well-crafted sentences, others see it as a dangerous shortcut, a quick end run that lets a student avoid doing research. In recent years, “I got it from the Internet” has become an answer that no teacher wants to hear from a student who is asked to justify a fact or assertion. But what if ChatGPT does the research for you and puts together paragraphs that are well structured, clear, accurate, and informative? This is the thing that is making many scholars, teachers, and commentators a little wobbly. What if AI will do your research for you?

While the idea of a computer writing text that sounds like it was written by a human being is very impressive, the more important question is “How does ChatGPT get its information?” Putting together grammatically correct and natural-sounding sentences is one thing. Putting together grammatically correct and natural-sounding sentences that make sense is something very different. I thought I would go to the source. I asked ChatGPT:

There we have it. ChatGPT relies on “large datasets of text.” Where does it get those texts? The Internet, including publicly available books and articles. ChatGPT does not search the Internet when a user makes a query. Rather, it relies on huge amounts of information gathered from the Internet and loaded into its database. It works from what it has been told. From there, ChatGPT puts together answers to questions and writes those answers in accordance with its own grammar and sentence construction algorithms.

Do you see what I see? I decided to ask ChatGPT to do my work, to answer some questions as if I were a student writing a paper.

First, I asked ChatGPT to write a history of the trombone. I got only three short paragraphs, which would disappoint any student. But have a look:

The first problem, as every trombone professor will recognize, is the first sentence. Does it sound familiar? It should. ChatGPT, for all of its highly touted creativity and originality, begins its history of the trombone by lifting—let’s call it for what it is: plagiarizing—the first sentence of the Wikipedia entry on the trombone:

If a student begins an assignment with this sentence, the result is guaranteed: a grade of F. But there are other obvious problems. To say that the trombone is an instrument that “[dates] back to at least the 15th century” implies that it might have been around earlier, in, say, the 14th century. But there is nothing in the historical record that suggests the instrument we would recognize as a trombone was around in the 14th century (1300s). The third sentence says that the slide is used to “produce different notes,” but there is no mention of the use of air, the embouchure, a mouthpiece, and how the embouchure changes shape when the trombone produces different notes. It is air AND the slide AND the embouchure that allow for production of various pitches. Was the trombone “originally used in outdoor brass bands and military ensembles”? No. The earliest known use of the trombone (“originally”) is traced to alta (loud instrument) bands from the fifteenth century that included trombones, trumpets, shawms, and bombards (a double-reed instrument). These were hardly “brass bands.” ChatGPT states that the trombone was used in opera “in the 19th century,” which is true, but we know that trombones were used by Claudio Monteverdi in his opera, Orfeo, which dates from 1607, the seventeenth century. ChatGPT gives the impression of presenting information with authority. But much of what it wrote about the history of the trombone is incomplete at best and untrue at worst.

I decided to ask ChatGPT to write the answer to a question I posed to a student on a doctoral (DMA) comprehensive exam when I taught at Arizona State University. I asked the student to craft lists of orchestral excerpts for a tenor or bass trombone symphony orchestra audition, then provide commentary on why these particular excerpts were selected. I asked for an audition list for a preliminary, semi-final, final, and final with section playing round, four lists in all. When I asked ChatGPT, this is what I got:

This is rubbish. Pure, unadulterated word salad with no basis in truth. Whatever data was fed into ChatGPT’s databases about the use of the trombone in orchestral literature, AI put it together and came up with junk. ChatGPT obviously does not know a thing about orchestral excerpts for trombone. It all sounds very erudite and informed. But apart from the commentary about the Symphony No. 3 of Camille Saint-Saëns (yes, that symphony does have a “lyrical trombone solo that requires a player to have a strong sense of phrasing and warm, full sound”), there is not a single sentence in this answer that is based in fact. For instance: Not only is there no trombone solo that features legato, a good sense of phrasing, and musicality in the first movement of Beethoven’s Symphony No. 5, the trombones do not play AT ALL in that movement. There is no trombone solo in the second movement of Brahms’ Symphony No. 2. The second movement of Beethoven’s Symphony No. 9 does have trombones, but there are no trombone solos. The first and second trombone parts have 33 notes each (the third trombone has 34) in the movement, and none of them are soloistic. Likewise, there is no lyrical trombone solo in the second movement of Tchaikovsky’s Symphony No. 6 (that’s the waltz movement in 5/4 meter), and the second movement of Dvořák’s Symphony No. 9 contains two beautiful chorales for trombones and tuba, but there is nothing therein that could be characterized as “rapid, virtuosic passages.” ChatGPT didn’t know anything about the trombone in orchestral music, and instead of telling me, “I don’t know,” it spewed out nonsense.

Exhausted from making up stuff about trombone auditions, ChatGPT strained to create a list for a semi-final round. The black box you see in the screenshot above was blinking on my computer for several minutes as ChatGPT was “thinking.” The box finally stopped blinking, and it was clear that no further answer was forthcoming. ChatGPT, like the Grinch who stole Christmas, “puzzled and puzzled till his puzzler was sore.” Someone will paste this into an assignment and hand it in. Grade: F.

Wanting to see if this kind of nonsense is normative with AI—remember, AI does not think, it only processes information (and dis-information)—I asked ChatGPT another question. This time I asked it if Beethoven’s Symphony No. 5 has a long solo for bass trombone. Here’s the answer ChatGPT generated:

I would love to see the citations for this. As any experienced trombone player who lives in the orchestral universe can tell you, there are three trombone parts in Beethoven’s Symphony No. 5. But none of them play in the first movement. The only movement where trombones play in the symphony is the final movement, Allegro, as one can see from this incipit (below) of the bass trombone part for the symphony. Trombones do not play in the first movement, Allegro con brio. Tacent (tacet) means the instrument is silent:

ChatGPT invented a solo for bass trombone in the first movement of the symphony. Then, it waxed eloquent about specific aspects of this alleged solo. It all sounds so plausible. And it would have been quite wonderful if Beethoven HAD written a solo for bass trombone in the symphony, a solo that, according to ChatGPT, “is a memorable and iconic moment in the symphony.” But ChatGPT’s answer does not contain a word of truth. By now, readers should be getting concerned about the dangers of ChatGPT and similar AI programs. Because they do not provide citations, there’s no way to know where they get the information from which they craft their nonsense. More than a few people will assume that what ChatGPT writes is reliable and truthful. It often is not. It can only parrot what it has been fed. And without citations, there is no way to know if it’s been fed truth or nonsense. And here is something else: I cannot imagine there is any source on earth that says that there is a long bass trombone solo in the first movement of Beethoven Symphony No. 5 unless it appeared in MAD magazine. Here, when asked a question for which it did not know the answer, ChatGPT did not say, “I don’t know.” It made up stuff. This is dangerous. Here is another grade of F. And if ChatGPT makes up stuff about medicine, what happens if someone dies as a result of its mis- and dis-information? It will happen.

We are already seeing that artificial intelligence makes up stuff. Tech writers refer to this as “hallucinating.” ChatGPT hallucinated about the trombone in Beethoven’s Symphony No. 5. But maybe I was a little too specific. A few months later (April 2023), I decided to ask ChatGPT another question about the bass trombone and Beethoven. Instead of asking if Beethoven wrote a solo for bass trombone in his Symphony No. 5, I asked if Beethoven wrote a solo for bass trombone in ANY of his symphonies. Here’s what I got:

Really? This is a hallucination of the first order. Let’s unpack this. ChatGPT received some new information since I first asked it about a solo for bass trombone in Beethoven’s Symphony No. 5. My guess is that others asked ChatGPT the same question and then provided feedback to ChatGPT that its answer was wrong. As we can see above, ChatGPT now says that Beethoven did NOT write a solo for bass trombone in his Symphony No. 5. Good on you, AI! That said, the reason Beethoven didn’t write a solo for bass trombone is NOT because “the bass trombone did not become a standard member of the symphony orchestra until the mid-19th century, after Beethoven’s death.” Beethoven DID use the bass trombone in his Symphony No. 5; he just didn’t write a solo for the instrument.

But ChatGPT was not content simply to offer the answer to my question. Like a pompous fool who loves to hear the sound of their own voice, ChatGPT hallucinated and made up more nonsense about Beethoven’s use of the trombone. NO: Beethoven does not use the trombone to “represent the voice of God” in the third movement of his Symphony No. 9. The trombone doesn’t even play in the third movement of the Ninth Symphony. Further, Beethoven did not use the tempo marking sehr feierlich in the movement (the movement’s tempo marking is Adagio molto e cantabile). Here’s another whopper. NO: It is utterly false to say “the entire trombone section is featured prominently in a section marked ‘Trombone Scherzo'” in the fourth movement of Beethoven’s Symphony No. 5. There is no section marked “Trombone Scherzo” in the symphony. Further, there is no “rapid, staccato playing” required from the trombones in the fourth movement of the Fifth Symphony.

But wait, there’s more! ChatGPT said that “Beethoven also wrote several chamber works that feature trombone solos.” Hmmm. Beethoven wrote exactly ONE chamber work for trombones, his Equali for four trombones, WoO 30. You can read all about this piece—three short movements in chorale style—in Howard Weiner’s excellent article, “Beethoven’s Equali (WoO 30): A New Perspective,” published by the Historic Brass Society Journal in 2002. There are no “trombone solos” in the Equali. Not content with hallucinating about the multiple chamber works that Beethoven wrote for trombone, ChatGPT then turned to Beethoven’s Sonata for Horn and Piano “which is often played on trombone.” First, the title of the piece is Sonata for Piano and Horn. ChatGPT then went on to hallucinate about how “the sonata features a beautiful and lyrical trombone solo in the second movement.” That’s like saying Johann Ernst Galliard wrote a beautiful solo for trombone, which he didn’t, although trombone players often play Galliard’s sonatas for bassoon or cello in transcriptions for trombone by Keith Brown and others.

Finally, ChatGPT puts a dagger in its hallucinations about Beethoven and the trombone when it said Beethoven “did include several notable trombone solos throughout his works.” If Beethoven had done so, trombone players would be so very grateful to the great composer. But he did not. Rely on ChatGPT for information about the trombone for that term paper you’re writing and you will once again receive a grade of F.

I decided to take this further and ask ChatGPT some questions about other aspects of the trombone and how it was used. Here is another question I asked on a doctoral comprehensive exam when I taught at Arizona State University. I asked ChatGPT about the differences between the early trombone (sackbut) and the modern trombone:

Once again, ChatGPT offers a combination of attractive-appearing prose along with many factual errors. No, the trombone’s bell does not point upwards, nor does the inner slide move in and out (every trombone player knows it’s the outer slide that moves in and out). Of course, just moving the slide along isn’t the only way the trombone changes pitch; air and the embouchure are in play as well. When ChatGPT wrote the absurd sentence, “The early trombone had a single slide and the modern trombone has a double slide,” I had to ask myself, “Where did it get this information?” To which I followed up with this thought: “Someone is going to trust this nonsense.” (And I had another thought: We need more and better research about the trombone. If ChatGPT is relying on currently available information, it needs better information. Much, much better information.)

I asked my friend, Trevor Herbert, one of the leading trombone scholars in the world (his book The Trombone is our instrument’s seminal book, and he received the International Trombone Association’s Lifetime Achievement Award in 2021) what he thought of ChatGPT’s take on the early and modern trombone. Trevor said:

Students and their teachers should understand that the basis of proper historical narratives reside in the analogue world. It is not the job of AI machines to arbitrate intellectual authenticity.

So the bottom line is that we just plough on with what we do and try to make it as good as possible. The accurate application of the semantic web as the basis of an imaginative tool is some way down the line. I would never use these sites nor recommend them.

I then asked ChatGPT another of my doctoral comprehensive exam questions, about the use of the trombone in various religious communities. ChatGPT produced a flowery commentary that sounds very impressive but is full of a remarkable number of errors of fact:

Once again, ChatGPT displayed its inability to cobble together a coherent history of the use of the trombone in a specific context. No, Bach did not “often” write for trombones, nor are trombones used in his Brandenburg Concerti or orchestral suites. But it was when ChatGPT began to elucidate on the Moravian community’s trombone tradition that it got into quicksand and could not get out. I asked my friend Stewart Carter (professor of music history, music theory, Collegium Musicum, and trombone at Wake Forest University, and co-editor of the Historic Brass Society Journal), a leading expert on Moravian music and how trombones were used in Moravian communities throughout history, to comment on what ChatGPT wrote:

The ChatGPT “essay” on trombones in religious traditions and contexts, including but not limited to J. S. Bach and Moravian communities, is at best inadequate, and at worst, simply wrong. Yes, J. S. Bach did use trombones in a few of his cantatas to support the vocal parts, but he did not write parts for trombones in any of his instrumental works.

As to the Moravians, the sect was not founded in what is now the Czech Republic in the 18th century. The renewed Moravian Church (Unitas fratrum) traces its origin to Saxony, where Count Nicholas von Zinzendorf invited Protestant refugees from Bohemia and Moravia to dwell on his estates in the Lausitz region, where they founded the town of Herrnhut. The Moravians used trombones in small chamber ensembles and large orchestras only rarely. In fact, the early Moravians almost never had “large” orchestras. I know of no Moravian anthems that employ trombones. The principal uses of trombones in early Moravian communities were to announce deaths and important arrivals to the community and to support the singing of chorales at funerals. They occasionally supported the singing of chorales in worship services, particularly the Easter Sunrise Service and the New Year’s Eve Watchnight Service.

Much has been made about ChatGPT’s ability to generate computer code on its own. But AI’s understanding of scientific principles (of, say, trombone acoustics and how sound is produced on brass instruments) is another matter entirely. I asked ChatGPT to answer a question posed by a colleague who is a music history professor (most trombone DMA committees include the trombone professor, another music professor, and a professor of music theory or music history). I included his question on comprehensive exams for doctoral students in trombone. The question references the acoustics of the trombone. Here’s the question and what ChatGPT wrote:

At first glance, this gives the impression of being a very comprehensive, informed answer. But when I asked my friend, Arnold Myers, one of the world’s leading experts on the subject of the acoustics of brass instruments (he is co-author, along with Murray Campbell and Joël Gilbert, of The Science of Brass Instruments, ASA Press/Springer, 2022), to weigh in on ChatGPT’s understanding of trombone acoustics, Arnold took the essay apart piece by piece:

Arnold Myers commented: This is a bit misleading: blowing air through the instrument does not itself produce a sound, blowing is necessary solely to produce the lip buzz.

Arnold Myers commented: This is correct, and important.

Arnold Myers commented: The harmonic spectrum depends on the amplitudes of the standing waves of different frequencies rather than the positions of nodes and antinodes.

Arnold Myers commented: This is misleading: the bell flare is important in the radiation of sound and the timbre, but it does not amplify the sound. The bell does not add energy, it lets some of the energy of the standing waves escape.

Arnold Myers commented: Smaller bells are often more flared and less cylindrical (as in the French trombone).

Arnold Myers commented: It would be hard to define ‘focused’ or ‘concentrated’. The sound of a trombone played loudly has a very wide range of harmonics, containing frequencies extending up to and beyond the limits of human hearing, certainly not “narrow.” So does the sound of a French horn played loudly. It is the euphonium sound that has a more limited range of harmonic content.

Arnold Myers had a few more comments as well. It’s not enough that ChatGPT has something to say. It’s also important to note what it does not say:

Several important considerations are omitted. These include:

A. The internal sound levels are very high, reaching 140-150 decibels in fortissimo. The bell is selective, transmitting the high frequencies (a high-pass filter) but reflecting most of the low-frequency energy (necessary to build up standing waves).

B. The timbre of the trombone depends on the dynamic level of playing. At low dynamics the timbre is mellow with little high-frequency content, but at high dynamics a significant proportion of the sound energy is converted from low frequencies to high as sound waves travel from mouthpiece to bell (nonlinear propagation of sound). This results in the inevitable brassy sound (cuivré) at high dynamic levels (whether the player wishes it or not).

C. The standing waves are strong enough to influence the vibration of the player’s lips. The buzz is shaped partly by the player’s voluntary muscular control and partly by the standing waves. This is experienced as ‘slotting’.

The reply to your question is well worded and comes over as authoritative, but it is not informed by state-of-the-art knowledge of brass instrument behaviour. If this had been turned in by one of my masters students, it would have received rather a low mark, not quite a fail. Quite a lot is correct, but important things are wrong (or missing). It gives the impression of being based on reading 50-year-old books.

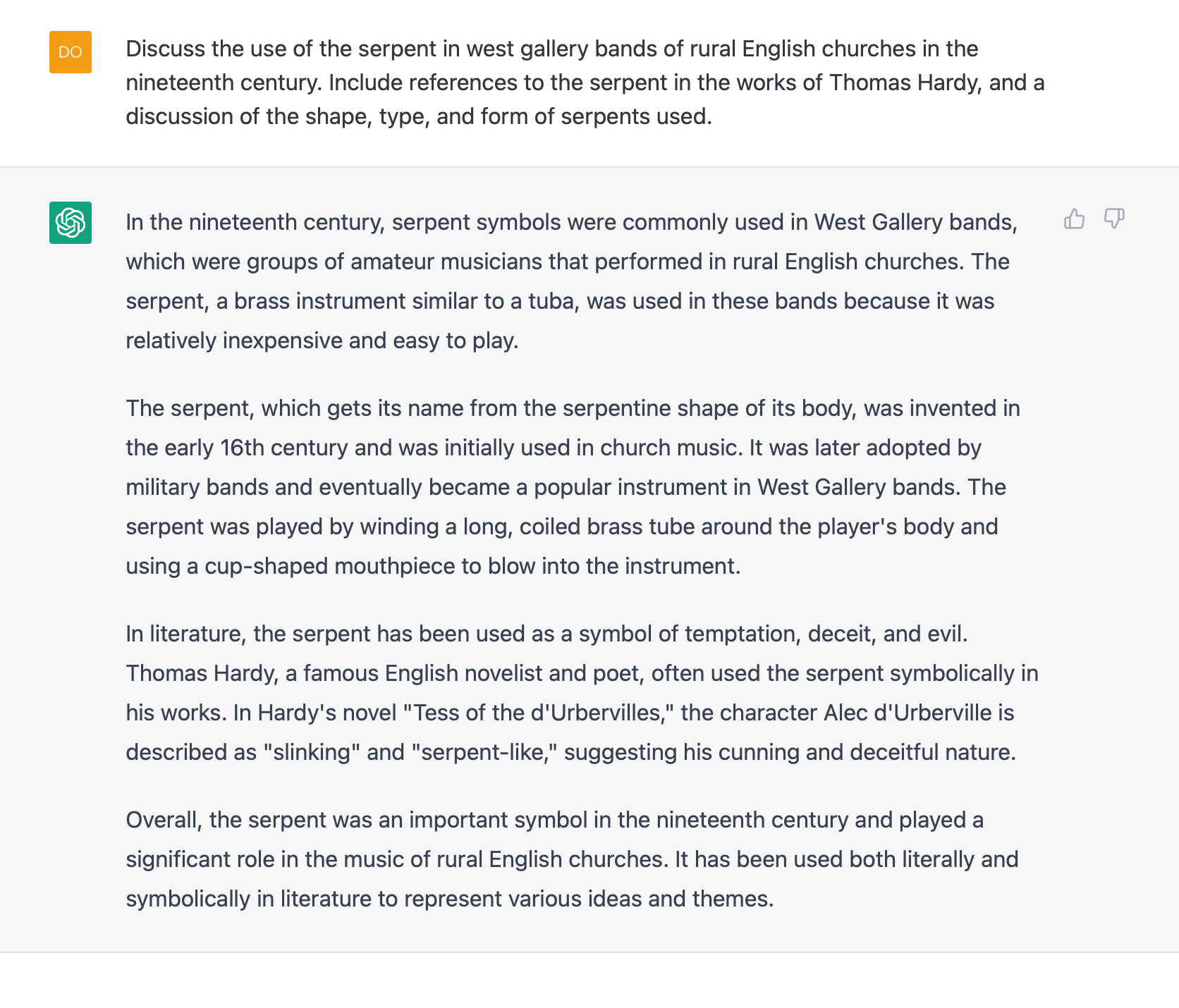

Old things pass away, ’tis true; but a serpent was a good old note: a deep rich note was the serpent.

I’m sure you must have had the occasional response to your blog in the way that I have to The Tuba Family, where someone has totally misunderstood something I’ve written to the extent of its very often having been taken as meaning totally the opposite of what’s intended. It can be very discouraging when you’ve tried your best to use clear and simple language. Our ChatGPT shows evidence of behaving like this someone. So, paragraph 1:

“serpent, a brass instrument.” My Webster has “brass . . .1. consisting of or made of brass.” All these years I’ve been under the impression a serpent’s almost always wooden, but then again . . .

“relatively inexpensive and easy to play.” Not that I’ve noticed—difficult to get your fingers round and all but impossible to pitch accurately. (Though maybe ChatGPT is an octopus with perfect pitch?)

Paragraph 2. Not the early 16th century: the late 16th century.

“The serpent was played by winding a long coiled brass tube around the player’s body.” You, boy, that chorister—roll up the sleeves of your cassock and grab the ends of this brass tube, that’s it, carefully. . . Now keep tight hold and walk carefully around me, holding it tightly. When it’s totally round my body don’t forget to cut me free otherwise I won’t be able to eat my dinner . . .

Paragraph 3. This is the real gem. ChatGPT has read somewhere that Thomas Hardy (was he the big fat one or the small thin one, by the way?) refers to the serpent in his novels. The only one Chat has seen wasn’t actually in a form that required reading but simply watching the screen, and this was Tess of the d’Urbervilles, so he has had to fit the serpent into this. All this symbolic stuff is nonsense. What Hardy does in no fewer than four short stories and, particularly, in Under the Greenwood Tree, which is based around a village band, is describe (very accurately) the way in which the serpent was used in such a band. (By the way, unusually there is no mention of a serpent in Tess; I’ve checked.) If Chat was really serious about symbolic serpents he could have referred to a much earlier book called The Holy Bible, specifically the book of Genesis, where the symbolic serpent really comes into its own.

Paragraph 4. I think the “important symbol” bit has been demonstrated to have been written about long before the nineteenth century.

So how would I grade this answer? It would be too cruel to grade it. I would take it along to my head of department with a request that he might send ChatGPT along to the school psychiatrist for assessment as this student is completely unable to benefit from my tuition owing to impaired mental faculties.

On the upside, the AI writing sample demonstrated good grammar and mechanics. The AI engine knows and applies basic punctuation, spelling, and capitalization. The paragraph structure develops an introduction, exposition, and conclusion. Honestly, it’s cleaner than most college writing, or for that matter, most business communication. Give the technical elements an A.

Scored by various methods, the college-level paragraphs are moderately difficult to read. A good editor would fix the long sentences, the over-reliance on “to be” verbs, and the zombie nouns. The paragraphs are also wordy and repetitious (131 repeated words in a 260-word sample)—sounding like college students padding their word count. So give the reading comprehension a B-minus (allowing for grade inflation), but there’s a more obvious flaw—it’s boring. And no one reads boring, not even college professors (who claim to read student papers but really only skim).

Accurate content? Not so good. ChatGPT claims that Rodeheaver traveled with the evangelist Gipsy Smith (he didn’t), gives the wrong date for the founding of Rainbow Records (1911; it was actually 1920), and says Rodeheaver wrote “hundreds” of songs (maybe 30, tops). When I asked Doug to try a more generic prompt (“Write about Homer Rodeheaver”), ChatGPT wrongly reported that he was born in Indiana. Another iteration asserted that Rodeheaver published Gospel Hymns No. 1–6 (an Ira Sankey hymnal, not Rodeheaver’s).

These factual errors are oddly authoritative and precise. Very few readers will recognize the name Gipsy Smith—it sounds so fantastic and exotic that it must be true, as does a very specific (and very wrong) claim about an 1894 hymnal.

As a follow-up, Kevin asked me to ask ChatGPT to write something about Rodeheaver based on a question that had a mixture of factual and fictional information. Many commentators are breathlessly arguing that ChatGPT and other AI programs will soon be able to write college papers. But what if a student asks ChatGPT to do something that, unbeknownst to the student, is flawed from the start? What if the request for ChatGPT to write an essay has, as its premise, misinformation? What if the question itself contains an impossibility? Kevin submitted a question and I put it into the ChatGPT program. Here’s what happened:

Obvious errors abound, such as the assertion that Rodeheaver was born in Indiana (he was born in Ohio). And there’s still that spurious claim that Rodeheaver started Rainbow Records in 1911 (he founded the label in 1920). But Kevin’s new request to ChatGPT was infused with several obviously false claims. ChatGPT did not recognize this. ChatGPT’s answer, therefore, is hilariously funny—and so very wrong. ChatGPT could have said, “I cannot answer this question.” But it didn’t. It answered it by making up stuff. Making up outrageous stuff. Kevin summarized his thoughts on this through the lens of his skills as an accomplished teacher, researcher, writer, and editor:

Suspecting AI’s inherent flaw, I deliberately constructed a new writing prompt that asked about Rodeheaver’s career as a violinist and his famous collection of postcards (both claims wildly untrue). Sure enough, ChatGPT returned glowing paragraphs claiming “Rodeheaver played the violin…for various churches and evangelistic campaigns throughout the United States.” Then comes a whopper, ChatGPT claiming that Rodeheaver’s (nonexistent) postcard collection “was one of the largest and most comprehensive in the world…valued at over $50,000.”

Yes, fact checking gets an F here, but not just a zero marked in red at the top of the paper. This level of duplicity earns the student a slow walk toward the dean’s office.

What’s my point? At the exact moment that we’re buried in fake news, social media lies, and pseudo research from Wikipedia—at the exact moment we need more truth—we get pure fabrication burnished with academic jargon. And breathless news reporters who think it’s good.

ChatGPT has not yet overcome the original problem with computer programming: Garbage In, Garbage Out. For these new AI writing engines, a bad or imprecise query still yields unusually bad answers. And if you don’t know the answer, make it up.

How will teachers know when a student is using AI? For now, there’s one obvious tell—a pronounced gap between flawless mechanics and abysmal content. That’s not how it works in real life, where bad student writing is consistently bad—mechanics, research, fact checking, execution—all bad. In the olden days, teachers could spot plagiarism because of one jewel-like paragraph shining from a pile of dreck. That hasn’t changed. Given a long enough writing sample, the AI content is still pretty obvious.

While the media gush about ChatGPT, even Sam Altman, co-founder and CEO of OpenAI, recognizes the limitations of ChatGPT:

ChatGPT is incredibly limited, but good enough at some things to create a misleading impression of greatness. It’s a mistake to be relying on it for anything important right now. It’s a preview of progress; we have lots of work to do on robustness and truthfulness.

Well, that is a truthful and honest statement. But a few months later, Altman admitted that he is afraid of what AI will do and might become:

I think it’d be crazy not to be a little bit afraid, and I empathize with people who are a lot afraid. The current worries that I have are that there are going to be disinformation problems or economic shocks, or something else at a level far beyond anything we’re prepared for.

And how about this: In a recent article (May 27, 2023) titled “Here’s What Happens When Your Lawyer Uses ChatGPT,” the New York Times wrote about a man who sued an airline, claiming he had been injured on a flight. His attorney turned to ChatGPT to write his brief. According to reports, it was a very well-written brief, 10 pages long and full of case law cited to support the plaintiff’s case. There was only one problem, as the Times reported: “ChatGPT had invented everything.” Seriously. AI simply made up the cases cited in the brief. All of them were bogus. The attorney admitted that ChatGPT had written the brief. He had even asked ChatGPT to verify that the cases it cited were real, and the AI program said “Yes,” even though it was making everything up. Now he is facing more than a grade of F and a trip to the Dean’s office:

Judge Castel said in an order that he had been presented with “an unprecedented circumstance,” a legal submission replete with “bogus judicial decisions, with bogus quotes, and bogus internal citations.” He ordered a hearing for June 8 to discuss potential sanctions.

Uh-oh.

Will AI improve over time? Of course. But will it ever replace the human mind, and the unique style of individual authors who have shown, throughout history, the remarkable ability to find and put together the right words? I don’t think so.

Bern Elliot, a vice president at Gartner (a technology research firm), recently summed up the current state of ChatGPT in an article on CNBC:

ChatGPT, as currently conceived, is a parlor trick. It’s something that isn’t actually itself going to solve what people need, unless what they need is sort of a distraction.

At the top of this article, I included a screenshot and link from Stephen Marche’s article, “The College Essay Is Dead.” As I conclude this essay, have a look at this headline from an article by Gary Marcus in Scientific American:

Marcus concludes his article with this insightful observation about ChatGPT and other AI programs:

Large language models are great at generating misinformation, because they know what language sounds like but have no direct grasp on reality—and they are poor at fighting misinformation. That means we need new tools. Large language models lack mechanisms for verifying truth, because they have no way to reason, or to validate what they do.

“They have no way to reason, or to validate what they do.” Despite the current cultural intoxication with AI, there is still, and there will always be, a place for human beings, we who have a soul and a conscience, we who live and act and breathe in the reality of space and time and experiences, and who are made “in the image of God” (Genesis 1:27).

The end of writing? That gives ChatGPT way too much credit. ChatGPT is being touted as a substitute for human thinking. There is danger afoot in this, friends: amidst the breathless enthusiasm for artificial intelligence, ChatGPT is being exposed as a massive mis- and disinformation machine. A replacement for Google search? ChatGPT doesn’t tell you its sources. At least Google sends you directly to the source, and once there, you can evaluate it and decide whether it is reliable. A tool to use when writing essays? Not if it doesn’t cite its work so you can check its sources. Remember: when AI hallucinates, you might not know that it is vomiting incorrect information, all decorated in flowery, plausible language. If you hand in a ChatGPT essay about the trombone, and your teacher not only gives you an F but sends you to the Dean’s office, where you face expulsion for plagiarism and making up stuff, you will find out that the cost of taking a shortcut can be very high. Keep studying, keep honing your craft. Conduct your own research; test your sources. You are better than ChatGPT. When approaching artificial intelligence, caveat emptor.